Can Requirements-Based Testing Be Automated?

Very few people love testing, but technology has come a long way since the days when tests had to be written manually, one by one. Requirements-based testing, however, tends to be a sticking point when teams look at how to increase their level of test automation.

With AI development progressing rapidly, it is likely that one day machines will be able to understand software requirements written in natural language, then create and run the necessary requirements-based tests. Unfortunately, this technology is still in its infancy. It is, however, already possible to increase automation and reduce the work involved in requirements-based testing.

If requirements-based testing is a lot of work, why do it at all?

To develop quality software

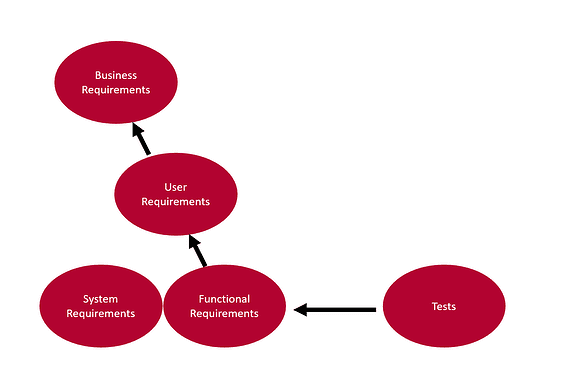

The purpose of requirements-based tests is to ensure that the software does what it was intended to do and nothing else. Testing against high-level requirements is vital to ensure that the software fulfills its purpose. Usually, tests are traced to detailed functional requirements, which in turn are traced to higher-level user and business requirements; this chain demonstrates that the code has been implemented correctly.
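To make the trace chain concrete, the Python sketch below rolls test-to-requirement links up a requirement hierarchy, so that each user or business requirement lists the tests that sit beneath it. The requirement IDs (FR, UR, BR), the dictionaries, and the function `roll_up_traces` are hypothetical illustrations, not part of any tool. A rolled-up link only shows that a test is traceable to a higher-level requirement; whether that requirement is fully verified is still a reviewer's judgment.

```python
from typing import Optional


def roll_up_traces(
    test_to_req: dict[str, set[str]],
    req_to_parent: dict[str, str],
) -> dict[str, list[str]]:
    """Map each requirement, at any level, to the tests that trace to it
    directly or through one of its child requirements (hypothetical helper)."""
    rolled: dict[str, set[str]] = {}
    for test_id, req_ids in test_to_req.items():
        for req in req_ids:
            node: Optional[str] = req
            seen: set[str] = set()  # guards against an accidental cycle in the hierarchy
            while node is not None and node not in seen:
                seen.add(node)
                rolled.setdefault(node, set()).add(test_id)
                node = req_to_parent.get(node)  # None once the top level is reached
    return {req: sorted(tests) for req, tests in rolled.items()}


# Hypothetical IDs: FR = functional, UR = user, BR = business requirement.
TEST_TO_REQ = {"test_1": {"FR-01"}, "test_2": {"FR-02"}}
REQ_TO_PARENT = {"FR-01": "UR-10", "FR-02": "UR-10", "UR-10": "BR-1"}

print(roll_up_traces(TEST_TO_REQ, REQ_TO_PARENT)["BR-1"])  # ['test_1', 'test_2']
```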

To make sure requirements are well defined

Requirements-based testing also helps to ensure that the software requirements themselves are comprehensive and defined to a suitable level of detail. Well-defined requirements are worth the effort because they avoid rework and make customer or stakeholder acceptance testing much smoother.

To comply with functional safety standards

Functional safety standards such as IEC 61508 (industrial) and ISO 26262 (automotive) call for requirements-based testing. The aerospace standard DO-178C identifies requirements-based testing as the most effective way to find errors and goes so far as to state that all tests must be requirements-based!

Automatic Test Generation

When thinking about automating requirements-based testing, a natural but mistaken conclusion is that tests need to be generated from the requirements. However, there is an alternative approach that is much more feasible.

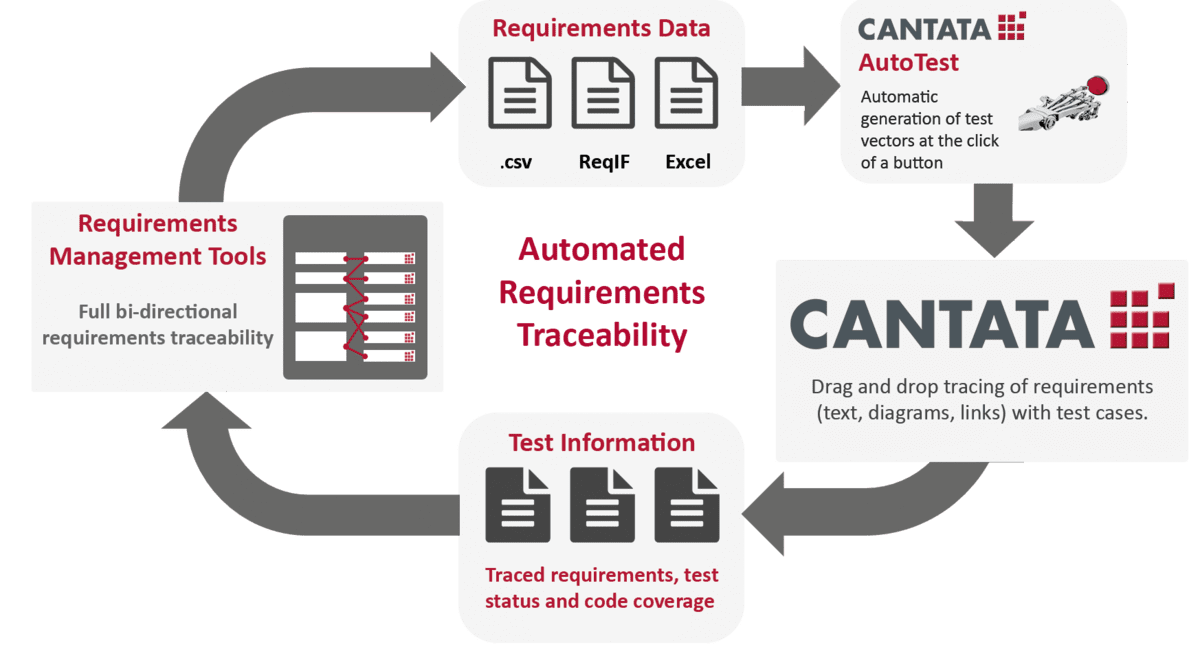

A test tool, such as the Cantata unit and integration testing framework, which we use as an example here, can auto-generate comprehensive test procedures from the source code. If clearly labeled, these test procedures can then be traced to the requirements. This method is particularly effective for unit testing and can substantially reduce the effort involved in achieving 100% requirements coverage.

Automatic unit test generation in Cantata uses a feature called Cantata AutoTest. AutoTest parses the source code to determine all possible paths through it, then creates complete unit test scripts that exercise the code to satisfy a chosen structural code coverage metric:

- Function entry point code coverage

- Statement coverage

- Decision coverage

- Modified condition and decision coverage (MC/DC)
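The difference between these metrics is easiest to see on a single decision. The Python sketch below uses a hypothetical function `motor_enabled`, containing one decision with two conditions, and checks two candidate test sets against decision coverage and unique-cause MC/DC. The function and helper names are illustrative assumptions; this is not how Cantata measures coverage.

```python
from itertools import combinations
from typing import Callable, Sequence

Vector = tuple[bool, ...]


def motor_enabled(armed: bool, door_closed: bool) -> bool:
    # Hypothetical unit under test: one decision made of two conditions.
    if armed and door_closed:
        return True
    return False


def has_decision_coverage(f: Callable[..., bool], tests: Sequence[Vector]) -> bool:
    """True if the tests drive the decision to both the True and False outcomes."""
    return {f(*t) for t in tests} == {True, False}


def has_mcdc(f: Callable[..., bool], tests: Sequence[Vector], n_conditions: int) -> bool:
    """Unique-cause MC/DC: for every condition there must be a pair of tests
    that differ only in that condition and produce different outcomes."""
    for i in range(n_conditions):
        shown = any(
            all((t1[j] != t2[j]) == (j == i) for j in range(n_conditions))
            and f(*t1) != f(*t2)
            for t1, t2 in combinations(tests, 2)
        )
        if not shown:
            return False
    return True


DECISION_TESTS = [(True, True), (False, False)]
MCDC_TESTS = [(True, True), (False, True), (True, False)]

print(has_decision_coverage(motor_enabled, DECISION_TESTS))  # True
print(has_mcdc(motor_enabled, DECISION_TESTS, 2))            # False
print(has_mcdc(motor_enabled, MCDC_TESTS, 2))                # True
```

For this function, any single call achieves function entry point coverage, and the two-test set already executes both `return` statements (statement coverage) and both decision outcomes (decision coverage). Only the three-test set shows that each condition independently changes the outcome, which is what MC/DC demands; in general MC/DC needs at least n + 1 tests for a decision with n conditions.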

(For more information on choosing a code coverage metric, see our free white paper "Which Code Coverage Metrics to Use".)

When tracing the auto-generated tests to requirements, it is necessary to consider whether each requirement is completely and correctly verified by the test cases assigned to it. This step requires critical thinking, so it cannot be fully automated. However, automation can make this manual activity easier.

AutoTest cases are generated with English test descriptions to make it easy to understand the unique path through the code that each test case verifies.
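As an illustration of the idea only (the sketch does not reproduce Cantata's actual output format), the Python below pairs each test case for a hypothetical `clamp` function with a plain-English description of the path it takes. The `GeneratedCase` record, the case IDs, and the descriptions are assumptions made for the example.

```python
from dataclasses import dataclass


def clamp(value: int, low: int, high: int) -> int:
    # Hypothetical unit under test with three distinct paths.
    if value < low:
        return low
    if value > high:
        return high
    return value


@dataclass(frozen=True)
class GeneratedCase:
    case_id: str
    description: str              # plain-English summary of the path exercised
    inputs: tuple[int, int, int]  # (value, low, high)
    expected: int


CASES = [
    GeneratedCase("clamp_1", "(value < low) is TRUE: returns low", (-5, 0, 10), 0),
    GeneratedCase("clamp_2", "(value < low) is FALSE, (value > high) is TRUE: returns high", (15, 0, 10), 10),
    GeneratedCase("clamp_3", "(value < low) is FALSE, (value > high) is FALSE: returns value", (7, 0, 10), 7),
]


def run_cases(cases: list[GeneratedCase]) -> list[str]:
    """Run every case and return the IDs of those that fail."""
    return [c.case_id for c in cases if clamp(*c.inputs) != c.expected]


for case in CASES:
    print(f"{case.case_id}: {case.description}")
print("failures:", run_cases(CASES))  # failures: []
```

If the expected values in generated tests reflect the code's current behavior, a description such as "returns high" tells the reviewer exactly what to check against the traced requirement; it does not by itself prove that the behavior is correct.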

In addition, test tools can make this process smoother by providing:

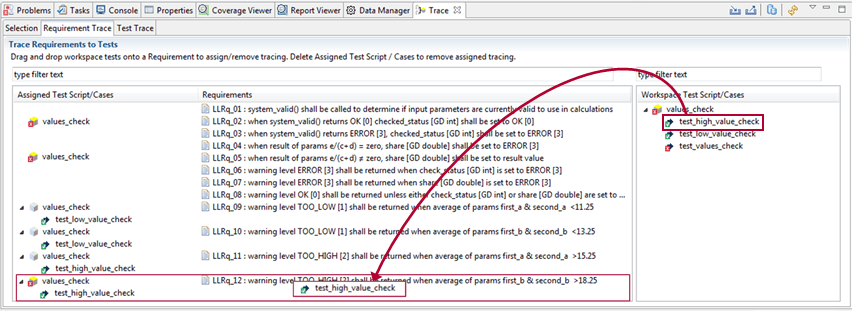

- A dedicated interface with all test and requirement data available in one location.

- An interface for easily establishing trace relationships between requirements and test cases.

In Cantata, the Trace module provides both of these functions.

Matching auto-generated, described tests to the appropriate requirements is significantly quicker and easier than splitting each requirement into its low-level component parts and manually writing tests for them.
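Once tests are traced, requirements coverage can be measured directly from the trace data. The Python sketch below, using hypothetical requirement and test IDs and a hypothetical `requirements_coverage` helper, reports the percentage of requirements with at least one traced test, the requirements with none, and any tests that trace to no requirement. That last list deserves review: a test exercising code that no requirement asks for may point to a missing requirement or to functionality the software should not have.

```python
def requirements_coverage(
    requirements: dict[str, str],
    trace: dict[str, set[str]],
) -> tuple[float, list[str], list[str]]:
    """Return (percent of requirements traced, untraced requirement IDs,
    test IDs that trace to no known requirement)."""
    req_ids = set(requirements)
    traced: set[str] = set()
    for reqs in trace.values():
        traced |= reqs
    covered = req_ids & traced
    percent = 100.0 * len(covered) / len(req_ids) if req_ids else 100.0
    uncovered = sorted(req_ids - covered)
    orphan_tests = sorted(t for t, reqs in trace.items() if not (reqs & req_ids))
    return percent, uncovered, orphan_tests


# Hypothetical requirements and trace links (test case ID -> requirement IDs).
REQUIREMENTS = {
    "REQ-01": "Values below the lower limit shall be raised to the lower limit.",
    "REQ-02": "Values above the upper limit shall be reduced to the upper limit.",
    "REQ-03": "Values within the limits shall be returned unchanged.",
    "REQ-04": "Out-of-range inputs shall be reported to the caller.",
}
TRACE = {
    "clamp_1": {"REQ-01"},
    "clamp_2": {"REQ-02"},
    "clamp_3": {"REQ-03"},
    "clamp_4": set(),
}

print(requirements_coverage(REQUIREMENTS, TRACE))  # (75.0, ['REQ-04'], ['clamp_4'])
```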

Once the initial auto-generated tests have all been traced to requirements, the workflow benefits from a mechanism for managing changes to both requirements and code. Automated processes and tools can highlight requirements changes, as well as identify and refactor the tests affected by code changes.
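A minimal sketch of such impact analysis is shown below in Python. It assumes the requirement text is available as a baseline and a current version, and that the functions each test exercises are known; both inputs, and the helpers `changed_requirements` and `impacted_tests`, are hypothetical. A test is flagged if it traces to a changed requirement or exercises a changed function.

```python
def changed_requirements(baseline: dict[str, str], current: dict[str, str]) -> set[str]:
    """IDs whose text was added, removed, or edited since the baseline."""
    ids = set(baseline) | set(current)
    return {r for r in ids if baseline.get(r) != current.get(r)}


def impacted_tests(
    trace: dict[str, set[str]],
    exercises: dict[str, set[str]],
    changed_reqs: set[str],
    changed_functions: set[str],
) -> list[str]:
    """Tests to review: those traced to a changed requirement
    or that exercise a changed function."""
    impacted = {t for t, reqs in trace.items() if reqs & changed_reqs}
    impacted |= {t for t, funcs in exercises.items() if funcs & changed_functions}
    return sorted(impacted)


# Hypothetical requirement versions and trace data.
BASELINE = {
    "REQ-01": "Raise values below the lower limit.",
    "REQ-02": "Reduce values above the upper limit.",
    "REQ-03": "Scale the input by the configured gain.",
}
CURRENT = {
    "REQ-01": "Raise values below the lower limit.",
    "REQ-02": "Reduce values above the upper limit and log a warning.",
    "REQ-03": "Scale the input by the configured gain.",
}
TRACE = {"clamp_1": {"REQ-01"}, "clamp_2": {"REQ-02"}, "scale_1": {"REQ-03"}}
EXERCISES = {"clamp_1": {"clamp"}, "clamp_2": {"clamp"}, "scale_1": {"scale"}}

changed = changed_requirements(BASELINE, CURRENT)
print(sorted(changed))                                       # ['REQ-02']
print(impacted_tests(TRACE, EXERCISES, changed, {"scale"}))  # ['clamp_2', 'scale_1']
```

In a real workflow, the set of changed functions would come from a code diff or the version-control system; here it is passed in by hand to keep the sketch self-contained.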

A detailed breakdown of this process is available in these white papers (although they are tailored to specific standards, the principles apply to any industry):